Distributed locking

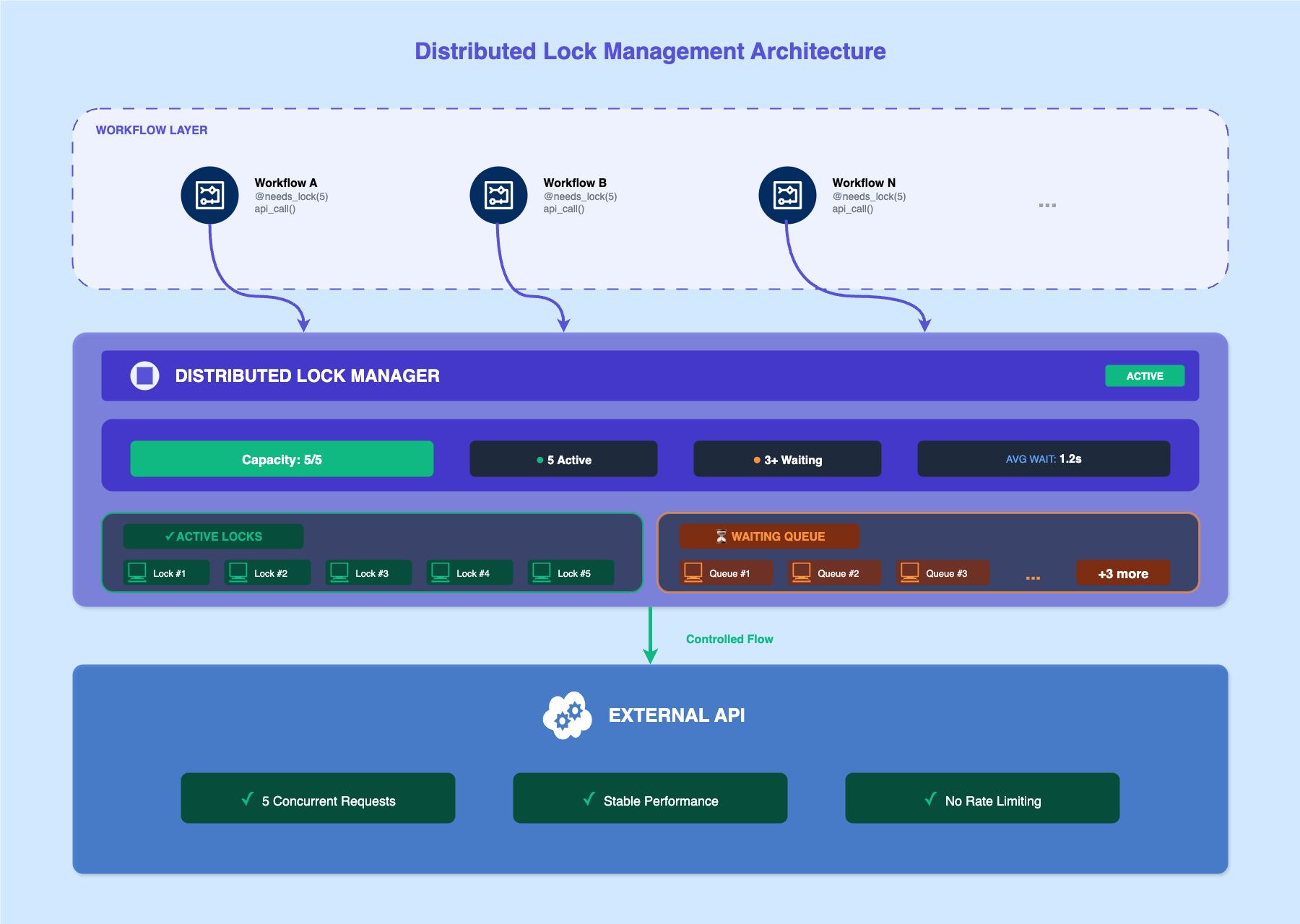

Distributed locking coordinates resource access across multiple workflow instances running on different nodes in your Atlan applications. This coordination prevents race conditions, caps concurrent access so you stay within rate limits, and keeps performance stable when your applications access shared resources like external APIs, databases, or file systems.

The Application SDK provides a @needs_lock decorator that automatically manages lock acquisition and release around Temporal activity execution.

Why distributed locking matters

Atlan applications run as Temporal workflows where activities execute independently on different worker nodes across your infrastructure. Each worker node operates without inherent knowledge of what other nodes are doing. While this distributed architecture provides scalability and fault tolerance, it creates coordination challenges when multiple workflow instances access shared resources simultaneously.

When multiple workflows run concurrently without coordination, three primary problems commonly arise:

API rate limit violations

Multiple workflows calling the same external API simultaneously can exceed rate limits and cause HTTP 429 throttling errors.

Database connection exhaustion

Heavy concurrent queries from multiple workflows can exhaust database connection pools and degrade query performance.

File system bottlenecks

Multiple workflows processing large files simultaneously create I/O bottlenecks that slow down all operations.

Without coordination, these problems show up as unpredictable failures, exhausted resources, and degraded performance, and they can cascade across workflows in ways that are difficult to troubleshoot.

How distributed locking works

The Application SDK implements a slot-based locking approach that distributes contention across multiple Redis keys. Each lock configuration creates a pool of available "slots" (independent Redis keys). When an activity needs to execute, it randomly selects a slot and attempts to acquire it. If the slot is available, the activity proceeds. If occupied, the system retries with a different random slot until one becomes available or the workflow timeout is reached.
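
The slot-selection loop can be sketched in Python as follows. This is a minimal illustration that assumes an in-memory dictionary in place of Redis; SlotPool, acquire_any_slot, and retry_delay_s are hypothetical names, not Application SDK APIs, and the SDK's real retry timing may differ.

```python
import random
import time
from typing import Dict, Optional


class SlotPool:
    """In-memory stand-in for a pool of Redis slot keys (hypothetical)."""

    def __init__(self, app_name: str, lock_name: str, max_slots: int) -> None:
        self.app_name = app_name
        self.lock_name = lock_name
        self.max_slots = max_slots
        self._held: Dict[str, str] = {}  # slot key -> owner ID

    def slot_key(self, slot: int) -> str:
        # Key layout described in this article: {APPLICATION_NAME}:{lock_name}:{slot}
        return f"{self.app_name}:{self.lock_name}:{slot}"

    def try_acquire(self, key: str, owner: str) -> bool:
        # Succeeds only if nobody holds the slot, like SET ... NX.
        if key in self._held:
            return False
        self._held[key] = owner
        return True

    def release(self, key: str, owner: str) -> None:
        # Only the current owner may free the slot.
        if self._held.get(key) == owner:
            del self._held[key]


def acquire_any_slot(pool: SlotPool, owner: str, timeout_s: float,
                     retry_delay_s: float = 0.05) -> Optional[str]:
    """Try random slots until one is free or the deadline passes."""
    deadline = time.monotonic() + timeout_s
    while True:
        # Each attempt draws a fresh random slot, spreading contention
        # across keys instead of piling every waiter onto one.
        key = pool.slot_key(random.randrange(pool.max_slots))
        if pool.try_acquire(key, owner):
            return key
        if time.monotonic() >= deadline:
            return None
        time.sleep(retry_delay_s)


if __name__ == "__main__":
    pool = SlotPool("my-app", "external-api", max_slots=2)
    print(acquire_any_slot(pool, "my-app:run-1", timeout_s=1.0))
    print(acquire_any_slot(pool, "my-app:run-2", timeout_s=1.0))
    print(acquire_any_slot(pool, "my-app:run-3", timeout_s=0.2))  # pool is full
```

Because each slot admits one holder at a time, the number of slots also acts as the concurrency limit for that resource, while spreading waiters over several keys keeps any single key from becoming a hotspot.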

The locking system integrates with Temporal workflows through an interceptor pattern. Temporal interceptors provide hooks into the workflow execution lifecycle, allowing the Application SDK to detect activities that require locking and orchestrate coordination transparently.

When you use the @needs_lock decorator on an activity, the system:

- Detects the decorator when the activity is scheduled

- Acquires a distributed lock before activity execution

- Executes your business logic with the acquired lock

- Releases the lock after activity completion or failure

The lock acquisition process generates a unique owner ID ({APPLICATION_NAME}:{workflow_run_id}), selects a random slot, and attempts to acquire it using the Redis SET command with the NX option (set only if the key does not already exist) and the EX option (expire the key after a given number of seconds). Lock release runs as an atomic Lua script that verifies the key still holds the caller's owner ID before deleting it.
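
A minimal model of acquisition and ownership-checked release follows, assuming an in-memory FakeRedis class (hypothetical) in place of a Redis server. RELEASE_SCRIPT is the compare-and-delete pattern shown in the Redis SET documentation; the SDK's actual script may differ.

```python
import time
from typing import Dict, Optional, Tuple

# Compare-and-delete in the form the Redis SET docs recommend for locks.
RELEASE_SCRIPT = """
if redis.call("get", KEYS[1]) == ARGV[1] then
    return redis.call("del", KEYS[1])
else
    return 0
end
"""


class FakeRedis:
    """Tiny in-memory model of SET NX EX plus the release script (hypothetical)."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def _get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:  # expired keys behave as absent
            del self._data[key]
            return None
        return value

    def set_nx_ex(self, key: str, value: str, ex: int) -> bool:
        # SET key value NX EX ex: writes only when the key does not exist.
        if self._get(key) is not None:
            return False
        self._data[key] = (value, time.monotonic() + ex)
        return True

    def release(self, key: str, owner: str) -> int:
        # Python equivalent of RELEASE_SCRIPT; Redis runs the Lua version atomically.
        if self._get(key) == owner:
            del self._data[key]
            return 1
        return 0


def owner_id(app_name: str, workflow_run_id: str) -> str:
    # Owner ID format from this article: {APPLICATION_NAME}:{workflow_run_id}
    return f"{app_name}:{workflow_run_id}"


if __name__ == "__main__":
    client = FakeRedis()
    key = "my-app:external-api:3"
    run_a = owner_id("my-app", "run-a")
    run_b = owner_id("my-app", "run-b")
    print(client.set_nx_ex(key, run_a, ex=60))  # True: slot was free
    print(client.set_nx_ex(key, run_b, ex=60))  # False: run-a holds it
    print(client.release(key, run_b))           # 0: run-b is not the owner
    print(client.release(key, run_a))           # 1: the owner releases
```

Against a real server with the redis-py client, the equivalent calls would be client.set(key, owner, nx=True, ex=ttl) to acquire and client.eval(RELEASE_SCRIPT, 1, key, owner) to release. Doing the check and the delete inside one script matters: a separate GET followed by DEL could delete a lock that expired and was re-acquired by another workflow in between.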

The system automatically calculates lock TTL (Time To Live) based on your activity's schedule_to_close_timeout configuration. This automatic TTL management prevents deadlocks by ensuring locks are eventually released even if an activity times out or fails.
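
The TTL derivation might look like the sketch below. The exact formula the SDK uses is not stated here; this assumes the TTL equals schedule_to_close_timeout rounded up to whole seconds, because Redis EX takes an integer. lock_ttl_seconds and the DEFAULT_TTL_SECONDS fallback are hypothetical.

```python
import math
from datetime import timedelta
from typing import Optional

DEFAULT_TTL_SECONDS = 300  # hypothetical fallback when no timeout is set


def lock_ttl_seconds(schedule_to_close_timeout: Optional[timedelta]) -> int:
    """Derive a lock TTL (in seconds) from an activity timeout.

    Assumption: TTL = timeout rounded up to whole seconds, never below 1,
    since Redis EX requires a positive integer.
    """
    if schedule_to_close_timeout is None:
        return DEFAULT_TTL_SECONDS
    return max(1, math.ceil(schedule_to_close_timeout.total_seconds()))


if __name__ == "__main__":
    print(lock_ttl_seconds(timedelta(minutes=10)))  # 600
```

With schedule_to_close_timeout=timedelta(minutes=10), the slot would expire on its own after 600 seconds, so a worker that crashes mid-activity cannot hold it indefinitely.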

Redis keys follow a hierarchical structure: {APPLICATION_NAME}:{lock_name}:{slot}. This structure isolates different applications, groups related operations, and provides clear ownership patterns for debugging.
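
Building and inspecting keys in this layout can be sketched with a few helpers. build_key, parse_key, and keys_for_lock are hypothetical, and fnmatchcase only approximates Redis glob matching.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List, NamedTuple


class LockKey(NamedTuple):
    app_name: str
    lock_name: str
    slot: int


def build_key(app_name: str, lock_name: str, slot: int) -> str:
    """Compose a key as {APPLICATION_NAME}:{lock_name}:{slot}."""
    return f"{app_name}:{lock_name}:{slot}"


def parse_key(key: str) -> LockKey:
    """Split a key back into its parts.

    Assumes lock_name and slot contain no colons; rsplit lets the
    application name itself contain colons.
    """
    app_name, lock_name, slot = key.rsplit(":", 2)
    return LockKey(app_name, lock_name, int(slot))


def keys_for_lock(keys: Iterable[str], app_name: str, lock_name: str) -> List[str]:
    """Filter keys with the same glob you might pass to a Redis key scan."""
    pattern = f"{app_name}:{lock_name}:*"
    return [k for k in keys if fnmatchcase(k, pattern)]


if __name__ == "__main__":
    print(parse_key(build_key("my-app", "external-api", 2)))
    # LockKey(app_name='my-app', lock_name='external-api', slot=2)
```

When debugging, the same pattern (for example my-app:external-api:*) can be passed to redis-cli --scan --pattern to list the slots currently held for one lock, since released or expired slots no longer exist as keys.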

See also

- @needs_lock decorator: Decorator parameters and usage patterns

- Configure distributed locking: Set up Redis and environment configuration

- Troubleshoot distributed locking: Common issues and solutions