Test your apps

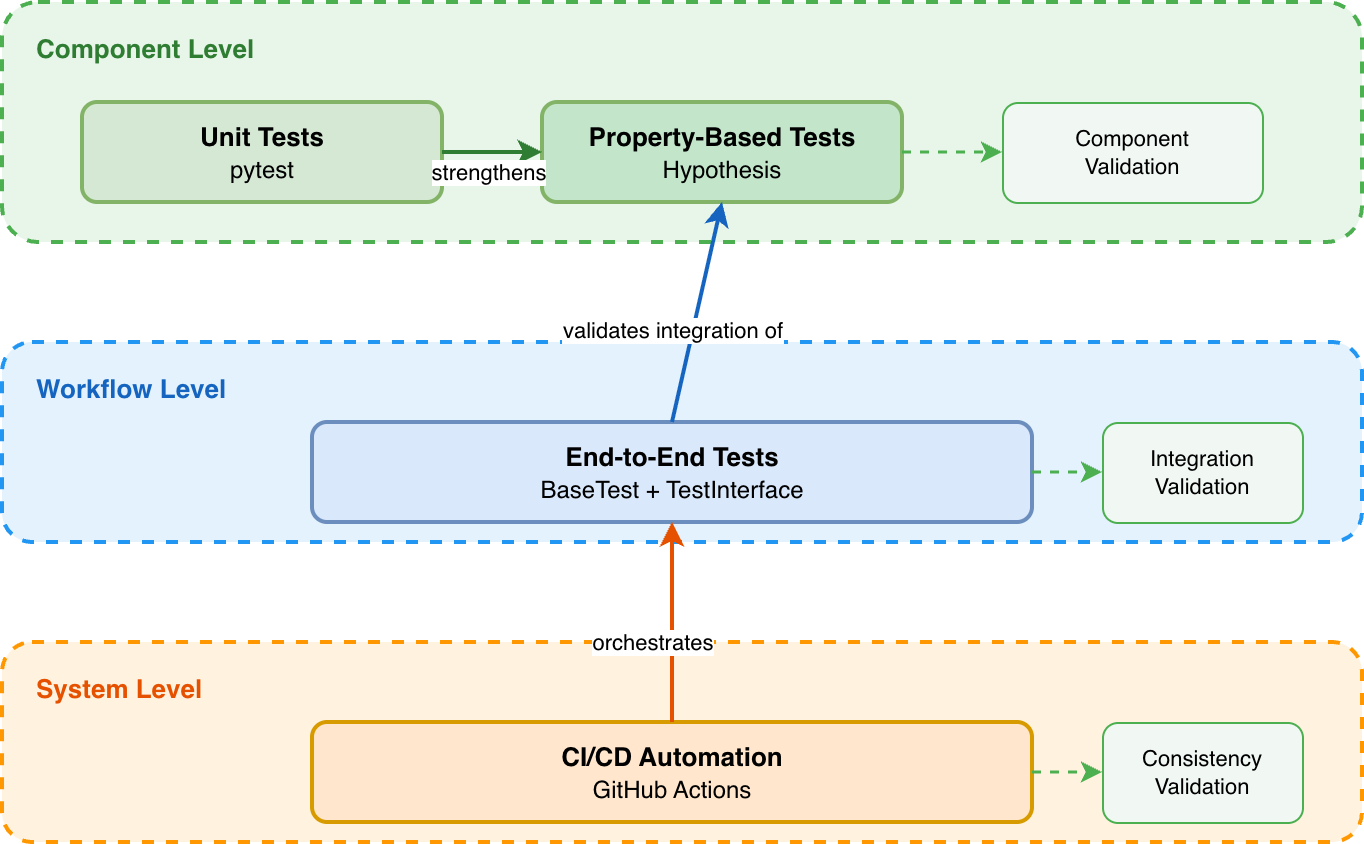

The Application SDK testing framework validates your applications through several complementary testing approaches, each of which catches a different class of issue, from component logic errors to integration failures, before it reaches production. Together they verify that your application works correctly when components interact in workflows, when unusual inputs trigger edge cases, and when code changes risk introducing regressions.

Core capabilities

Unit testing

Validates individual components in isolation using pytest and mocks, providing fast feedback in milliseconds during development.

- Isolated component testing with mocked dependencies

- Async test support with AsyncMock

- Fast execution for rapid iteration

Property-based testing

Automatically generates diverse test cases using Hypothesis to discover edge cases that manual testing misses.

- Automatic test case generation

- Input shrinking for minimal failure examples

- Reusable strategies for SDK components

End-to-end testing

Validates complete workflows from start to finish using shared base classes that handle infrastructure concerns.

- Complete workflow validation

- Configuration-driven test scenarios

- Schema-based data validation with Pandera

CI/CD automation

Automates test execution through GitHub Actions, ensuring consistent quality gates on every code change.

- Automatic test execution on pull requests

- Coverage threshold enforcement

- Standardized test environments

How the test framework works

The framework provides four complementary testing approaches, each operating at a different level of your application. Unit tests validate individual components in isolation, property-based tests explore edge cases automatically, end-to-end tests validate complete workflows, and automation runs tests consistently throughout development.

Unit testing

The framework uses pytest for unit testing and provides AsyncMock for mocking asynchronous operations. Unit tests validate individual components in isolation.

- Component validation: Tests validate individual components by replacing external dependencies with mocks. This isolation enables fast execution and deterministic results because tests never wait for network calls, database queries, or file operations.

- pytest framework: The framework standardizes unit testing with pytest, which provides test discovery, fixtures for common setup, and assertions for validation. Tests follow pytest conventions for organization and execution.

- AsyncMock support: The framework provides `AsyncMock` for mocking asynchronous operations. When your component awaits a database query or API call, the mock properly returns awaitable objects that integrate with Python's async/await syntax.

- Test organization: Tests mirror source code structure for easy navigation. If your handler lives in `application_sdk/handlers/metadata.py`, tests live in `tests/unit/handlers/test_metadata.py`. This parallel structure makes finding tests intuitive.

- Success and failure paths: Tests validate both expected behavior with valid inputs and error handling with invalid inputs. The framework uses `pytest.raises` to verify that components properly raise exceptions for invalid inputs rather than producing incorrect results silently.
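As a minimal sketch of these conventions, the example below tests a hypothetical `fetch_table_names` coroutine whose database client is replaced with an `AsyncMock` from the standard library's `unittest.mock`. The function, the client's `run_query` method, and the test names are illustrative assumptions, not SDK APIs. The tests drive the coroutine with `asyncio.run` so the sketch needs no async pytest plugin; a project that uses one might write `async def` tests instead.

```python
import asyncio
from unittest.mock import AsyncMock

import pytest


async def fetch_table_names(client, schema: str) -> list[str]:
    """Hypothetical component under test: asks a client for table names."""
    if not schema:
        raise ValueError("schema must not be empty")
    rows = await client.run_query(f"SHOW TABLES IN {schema}")
    return sorted(row["name"] for row in rows)


def test_fetch_table_names_success():
    # The mock stands in for a real database client; no network call is made.
    client = AsyncMock()
    client.run_query.return_value = [{"name": "orders"}, {"name": "customers"}]

    result = asyncio.run(fetch_table_names(client, "sales"))

    assert result == ["customers", "orders"]
    client.run_query.assert_awaited_once_with("SHOW TABLES IN sales")


def test_fetch_table_names_rejects_empty_schema():
    client = AsyncMock()

    # Invalid input must raise rather than silently return a wrong result.
    with pytest.raises(ValueError, match="schema must not be empty"):
        asyncio.run(fetch_table_names(client, ""))

    # The dependency is never touched on the failure path.
    client.run_query.assert_not_awaited()
```

The first test covers the success path and checks how the dependency was called; the second covers the failure path with `pytest.raises`.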

Property-based testing

The framework integrates Hypothesis for property-based testing, which automatically generates diverse test cases instead of requiring manually written examples. Property-based tests discover edge cases that manual testing misses.

- Edge case discovery: Instead of writing specific test examples with particular inputs, you describe properties that hold true. Hypothesis generates test cases automatically: empty strings, very long strings, unicode characters, special symbols, whitespace, and combinations you wouldn't think to test manually. This systematic exploration of the input space discovers bugs that example-based testing misses.

- Automatic test generation: The framework uses Hypothesis to generate hundreds of diverse test cases based on properties you define. You specify invariants like "the result is lowercase" or "the function is idempotent," and Hypothesis explores the input space to find violations.

- Input shrinking: When Hypothesis finds a failure, it automatically simplifies the input to find the minimal example that triggers the bug. If a test fails with a 500-character string containing special characters, Hypothesis tries progressively simpler inputs until it identifies the smallest input that still fails. This shrinking process helps identify the root cause by reducing complex failures to simple, understandable examples.

- Reusable strategies: The framework provides reusable strategies in `application_sdk/test_utils/hypothesis/strategies` that define how to generate valid test data for SDK components. Strategies describe data structure and constraints. A credential strategy generates test data with all required fields, appropriate types, and valid values. You compose simple strategies into complex ones, building realistic test data that matches your application's requirements.
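The sketch below illustrates both ideas with plain Hypothesis: two property tests that state invariants for a hypothetical `normalize_name` function, and a composed strategy that builds credential-like dictionaries. The function, the field names, and the `credentials` strategy are assumptions made for illustration; they are not the strategies shipped with the SDK.

```python
from hypothesis import given, strategies as st


def normalize_name(value: str) -> str:
    """Hypothetical function under test: trims whitespace and lowercases."""
    return value.strip().lower()


# Hypothetical reusable strategy composed from simpler ones:
# every generated dict has the required fields with valid values.
credentials = st.fixed_dictionaries(
    {
        "host": st.text(alphabet="abcdefghijklmnopqrstuvwxyz", min_size=1, max_size=20),
        "port": st.integers(min_value=1, max_value=65535),
        "username": st.text(min_size=1, max_size=30),
    }
)


@given(st.text())
def test_normalize_name_is_lowercase(value):
    # Invariant: lowercasing the result again changes nothing.
    result = normalize_name(value)
    assert result == result.lower()


@given(st.text())
def test_normalize_name_is_idempotent(value):
    # Invariant: applying the function twice equals applying it once.
    once = normalize_name(value)
    assert normalize_name(once) == once


@given(credentials)
def test_credentials_strategy_produces_valid_data(creds):
    assert set(creds) == {"host", "port", "username"}
    assert 1 <= creds["port"] <= 65535
    assert creds["host"] and creds["username"]
```

If one of these properties failed, Hypothesis would report the shrunk, minimal input rather than the first failing input it happened to generate.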

End-to-end testing

The framework provides base classes for end-to-end testing that validate complete workflows from start to finish. End-to-end tests catch integration issues that unit tests can't discover: problems where individual components work correctly in isolation but fail when combined.

- Workflow validation: End-to-end tests exercise complete workflows from API calls through data processing to output validation. Tests run applications with realistic inputs and verify correct outputs, catching problems like components making incompatible assumptions, configuration errors, or workflow coordination failures.

- TestInterface base class: `TestInterface` provides the abstract foundation that all E2E tests build on. It handles infrastructure concerns that every test needs: automatically discovering `config.yaml` files, parsing YAML configuration, initializing `APIServerClient` for API interactions, and setting up test configuration attributes. The class defines abstract methods for health checks and workflow execution that concrete tests implement for their specific scenarios.

- BaseTest implementation: `BaseTest` extends `TestInterface` with a standard implementation of common E2E operations. It defines the order in which tests are performed and implements methods for metadata fetching, preflight checks, workflow execution, and result verification. Most tests inherit from `BaseTest` unchanged, getting this standard workflow for free.

- APIServerClient communication: `APIServerClient` handles low-level HTTP communication with your application server. It formats API requests, makes HTTP calls, processes JSON responses, and validates status codes. This component isolates protocol details from test logic. Tests work with high-level methods like `run_workflow()` without worrying about HTTP headers or JSON serialization.
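The layering described above follows the template-method pattern, which can be sketched in a few lines. Everything in this example is a hypothetical stand-in: `SketchTestInterface`, `SketchBaseTest`, and `FakeClient` only mirror the roles of `TestInterface`, `BaseTest`, and `APIServerClient`, and their method names and signatures are assumptions rather than the SDK's real API.

```python
from abc import ABC, abstractmethod


class FakeClient:
    """Stand-in for an API client; returns canned responses, makes no HTTP calls."""

    def health(self) -> dict:
        return {"status": "ok"}

    def run_workflow(self, payload: dict) -> dict:
        return {"success": True, "received": payload}


class SketchTestInterface(ABC):
    """Illustrative abstract base: holds shared setup, declares required steps."""

    def __init__(self, config: dict, client: FakeClient) -> None:
        self.config = config
        self.client = client

    @abstractmethod
    def check_health(self) -> None: ...

    @abstractmethod
    def check_workflow(self) -> None: ...


class SketchBaseTest(SketchTestInterface):
    """Illustrative standard implementation that also fixes the step order."""

    def check_health(self) -> None:
        assert self.client.health()["status"] == "ok"

    def check_workflow(self) -> None:
        response = self.client.run_workflow(self.config["workflow_payload"])
        assert response["success"] is True

    def run_all(self) -> list[str]:
        executed = []
        for step in (self.check_health, self.check_workflow):
            step()
            executed.append(step.__name__)
        return executed


class MyConnectorTest(SketchBaseTest):
    """A concrete test inherits the standard flow unchanged."""


steps = MyConnectorTest({"workflow_payload": {"schema": "sales"}}, FakeClient()).run_all()
print(steps)
```

The concrete class adds no code of its own, which is the point of the design: the base class owns ordering and protocol details, and a subclass overrides a step only when its scenario differs.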

Configuration-driven testing

E2E tests separate test logic from test data by using configuration files. Test logic (how to execute workflows and validate results) lives in code that rarely changes. Test data (credentials, filters, expected responses) lives in configuration that changes frequently.

This separation improves maintainability. When credentials change or you need to test new scenarios, you edit configuration files rather than code. Non-developers can define test scenarios by writing YAML rather than Python. The same test logic handles multiple scenarios with different configurations.
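A small sketch can make the split concrete. The YAML keys (`scenarios`, `filters`, `expected_tables`), the in-memory `TABLES` data, and the helper functions below are all hypothetical; the actual configuration format is described in the test configuration reference. The sketch assumes PyYAML is installed.

```python
import yaml

# Hypothetical scenario file; the keys shown here are illustrative only.
CONFIG_TEXT = """
scenarios:
  - name: include_sales_only
    filters:
      include: ["sales"]
    expected_tables: 2
  - name: include_everything
    filters:
      include: ["sales", "hr"]
    expected_tables: 3
"""

# Stand-in data source so the sketch runs without a real application.
TABLES = {"sales": ["orders", "customers"], "hr": ["employees"]}


def count_tables(filters: dict) -> int:
    """Test logic: identical for every scenario."""
    return sum(len(TABLES[schema]) for schema in filters["include"])


def run_scenarios(config_text: str) -> dict[str, bool]:
    """Run the same logic once per configured scenario and record pass/fail."""
    config = yaml.safe_load(config_text)
    return {
        scenario["name"]: count_tables(scenario["filters"]) == scenario["expected_tables"]
        for scenario in config["scenarios"]
    }


results = run_scenarios(CONFIG_TEXT)
assert all(results.values()), results
```

Adding a third scenario means adding a YAML entry; `count_tables` and `run_scenarios` stay untouched.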

Schema-based validation

The framework validates data structure and quality using Pandera schemas. Schemas define expectations: which columns exist, what types they have, what values are valid, how many records you expect. When workflows produce data, tests validate it with schemas to catch data quality issues.

This validation catches integration problems that unit tests miss. A component might correctly implement its logic but produce data in the wrong format, with missing columns, or with values outside expected ranges. Schema validation detects these structural and quality issues in the integration layer.

Automation

The framework integrates with GitHub Actions to automate test execution throughout the development lifecycle. Automation runs tests consistently on every code change with standardized environments.

- Consistent execution: The framework provides GitHub Actions that run tests automatically on every pull request and commit. Tests execute in standardized environments with controlled dependencies, producing consistent results regardless of developers' local setups. This consistency catches environment-specific issues before they reach production.

- Unit test automation: The framework includes a GitHub Action at `.github/actions/unit-tests/action.yaml` that runs unit tests, generates coverage reports, posts results as pull request comments, and optionally uploads reports to storage. This automation provides rapid feedback on code changes.

- E2E test automation: The framework includes a GitHub Action at `.github/actions/e2e-apps/action.yaml` that executes end-to-end workflows. The action securely reads credentials from GitHub secrets and validates application behavior in realistic scenarios with actual data processing.

- Coverage enforcement: The framework uses coverage as an enforced quality metric. Tests fail if overall code coverage falls below configured thresholds, preventing coverage from decreasing as the application evolves. Coverage reports appear in pull requests, making test coverage visible during code review and catching coverage gaps before code merges.

See also

- Set up E2E tests - Create end-to-end tests for your application workflows

- Test configuration - Complete reference for test configuration options and guidelines

- Handlers - Understand handlers that you test in your application