Set up Databricks

This guide walks through configuring Databricks to work with Atlan's Data Quality Studio: creating the required service principal, setting up authentication, and granting the necessary privileges. Atlan recommends using serverless SQL warehouses for instant compute availability.

Some capabilities shown here may require additional enablement or licensing. Contact your Atlan representative for details.

System requirements

Before setting up the integration, make sure you meet the following requirements:

- Databricks Premium or Enterprise edition

- Serverless Compute for Jobs & Notebooks enabled

- Dedicated SQL warehouse for running DQ-related queries

Before you begin

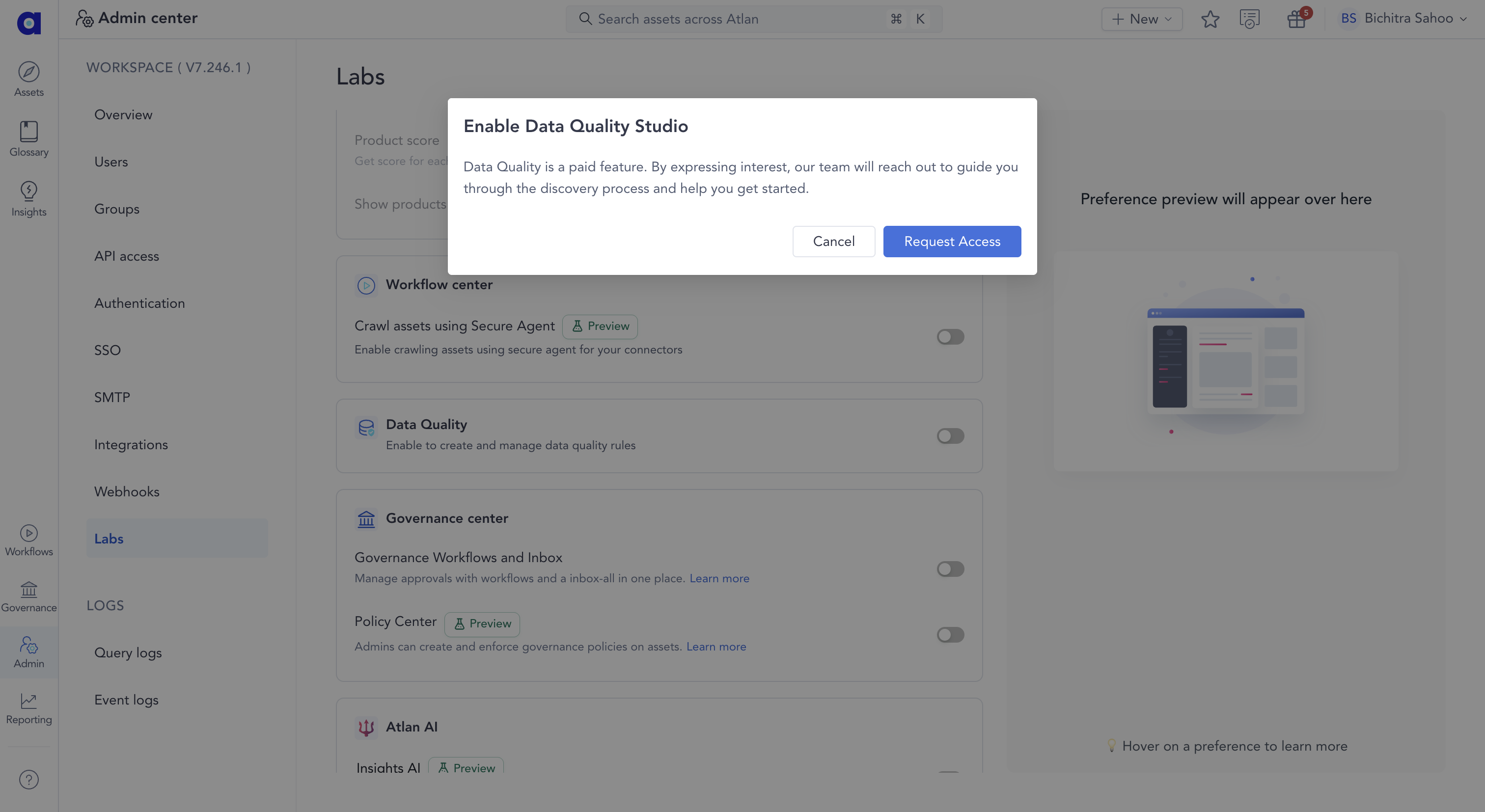

Data Quality Studio is a paid feature that must be enabled before you can use it. Request access with the following steps so the Atlan team can enable it while you continue setting up Databricks:

- Navigate to Admin > Labs in Atlan

- Turn on the Data Quality toggle

- Click Request Access when the popup appears

After you request access, the Atlan team reviews the request and grants access. You can then proceed to enable Data Quality for Databricks.

Prerequisites

Before you begin, complete the following steps:

- Obtain Workspace admin access, plus either the Metastore Admin role or the CREATE CATALOG privilege

- Identify your dedicated SQL warehouse for DQ operations

- Review Data Quality permissions to understand required privileges

Create service principal

Create the service principal that Atlan uses to perform Data Quality (DQ) operations within your Databricks workspace.

Follow the appropriate guide based on your Databricks deployment environment:

- Creating a Service Principal in AWS based Databricks Accounts

- Creating a Service Principal in Azure based Databricks Accounts

Once the service principal is created, you can assign it to your workspace by following the instructions in the guide.

Store the following credentials securely:

- client_id

- client_secret

- tenant_id (Azure only)

- Service principal name

Atlan recommends naming it: atlan-dq-service-principal

Set up authentication: Choose one of the following authentication methods for your service principal:

OAuth (Recommended):

- Use the client_id, client_secret, and tenant_id (Azure only) from the service principal created in the previous step

- No additional configuration required

Personal access token (PAT):

- Follow the Databricks Personal Access Token guide to generate a token for the service principal

- Store the token securely for use in the next steps
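If you want to sanity-check the OAuth credentials before entering them in Atlan, they map onto a standard OAuth client-credentials token request. The following is a minimal sketch that only builds the request and doesn't send it. It assumes a Databricks-managed service principal and the workspace-level token endpoint `/oidc/v1/token`; the helper name `build_token_request` and the host used in the usage note are hypothetical. Azure setups that authenticate through Microsoft Entra ID with a tenant_id use a different endpoint, which this sketch doesn't cover.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(workspace_host, client_id, client_secret):
    """Build (but do not send) an OAuth client-credentials token request.

    Assumption: workspace_host is the bare workspace hostname, without a scheme.
    """
    url = f"https://{workspace_host}/oidc/v1/token"
    # Form-encoded body requesting a token scoped to the Databricks APIs.
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode("ascii")
    # client_id and client_secret are sent as HTTP Basic credentials.
    basic = base64.b64encode(
        f"{client_id}:{client_secret}".encode("utf-8")
    ).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

For example, `build_token_request("example.cloud.databricks.com", client_id, client_secret)` returns a request object that can be passed to `urllib.request.urlopen` once you are ready to contact the workspace.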

Grant warehouse access: Grant the service principal access to a SQL warehouse that's used to run Data Quality queries.

- Go to your Databricks workspace UI

- Navigate to SQL > SQL Warehouses

- Click on the warehouse you want Atlan to use

- Click on the Permissions button

- Select the Service Principal (atlan-dq-service-principal) from the list

- Assign the Can Use permission

- Click Add

Once access is granted, Atlan can use this warehouse to run SQL queries related to Data Quality operations.

Set up Databricks objects

Create the Databricks objects that Atlan Data Quality Studio needs to function.

Create the atlan_dq catalog

Atlan stores data quality rule metadata, data quality rule execution results, and internal processing tables for each data quality connection in a catalog within your Databricks environment.

If you want to enable data quality for multiple Atlan connections within the same Unity Catalog metastore, create a separate, uniquely named catalog for each connection, because each Atlan connection requires its own dedicated catalog. In the following steps, replace atlan_dq with the name of your catalog.

Run the following SQL command in a Databricks notebook or SQL editor:

CREATE CATALOG IF NOT EXISTS atlan_dq;
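When several Atlan connections share one metastore, the one-catalog-per-connection rule can be checked before any SQL is run. The following is a minimal sketch; the helper name `create_catalog_statements` and the example catalog names are hypothetical, and it assumes catalog names are simple unquoted identifiers that are compared case-insensitively.

```python
import re

# Assumption: catalog names are plain identifiers (letters, digits, underscores).
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def create_catalog_statements(catalog_names):
    """Return one CREATE CATALOG statement per Atlan connection's catalog."""
    names = list(catalog_names)
    # Reject duplicates, ignoring case, so no two connections share a catalog.
    lowered = [name.lower() for name in names]
    if len(set(lowered)) != len(lowered):
        raise ValueError("each Atlan connection needs its own uniquely named catalog")
    for name in names:
        if not _IDENTIFIER.match(name):
            raise ValueError(f"not a simple unquoted identifier: {name!r}")
    return [f"CREATE CATALOG IF NOT EXISTS {name};" for name in names]


# Example: two connections, each with a dedicated catalog.
for statement in create_catalog_statements(["atlan_dq", "atlan_dq_finance"]):
    print(statement)
```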

Grant privileges

Grant the following privileges to atlan-dq-service-principal so it can create internal objects, read the Atlan API token, and query data for quality checks. Replace placeholders with real values.

Manage the atlan_dq catalog

GRANT USE CATALOG ON CATALOG atlan_dq TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT CREATE SCHEMA ON CATALOG atlan_dq TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

Access data for quality checks (choose one scope)

Catalog level

GRANT USE CATALOG ON CATALOG <CATALOG> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT USE SCHEMA ON CATALOG <CATALOG> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT SELECT ON CATALOG <CATALOG> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

Schema level

GRANT USE CATALOG ON CATALOG <CATALOG> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT USE SCHEMA ON SCHEMA <SCHEMA> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT SELECT ON SCHEMA <SCHEMA> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

Table level

GRANT USE CATALOG ON CATALOG <CATALOG> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT USE SCHEMA ON SCHEMA <SCHEMA> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

GRANT SELECT ON TABLE <TABLE> TO '<SERVICE_PRINCIPAL_CLIENT_ID>';

These grants let Atlan create its internal schemas and run SELECT queries needed for rule execution.
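The three read-access scopes differ only in where USE SCHEMA and SELECT are granted, so the statements can be rendered from a single template. The following is a minimal sketch; the helper name `dq_read_grants` is hypothetical, the principal is quoted the same way as in the statements above, and it assumes the <SCHEMA> and <TABLE> placeholders are written as fully qualified names (catalog.schema and catalog.schema.table).

```python
def dq_read_grants(client_id, scope, catalog, schema=None, table=None):
    """Render the read-access GRANT statements for one scope.

    scope is "catalog", "schema", or "table".
    """
    principal = f"'{client_id}'"  # quoted the same way as in this guide
    # Every scope needs USE CATALOG on the catalog that holds the data.
    grants = [f"GRANT USE CATALOG ON CATALOG {catalog} TO {principal};"]

    if scope == "catalog":
        grants += [
            f"GRANT USE SCHEMA ON CATALOG {catalog} TO {principal};",
            f"GRANT SELECT ON CATALOG {catalog} TO {principal};",
        ]
    elif scope == "schema":
        if not schema:
            raise ValueError("schema scope needs a schema name")
        target = f"{catalog}.{schema}"  # assumption: catalog-qualified schema
        grants += [
            f"GRANT USE SCHEMA ON SCHEMA {target} TO {principal};",
            f"GRANT SELECT ON SCHEMA {target} TO {principal};",
        ]
    elif scope == "table":
        if not (schema and table):
            raise ValueError("table scope needs both a schema and a table name")
        grants += [
            f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO {principal};",
            f"GRANT SELECT ON TABLE {catalog}.{schema}.{table} TO {principal};",
        ]
    else:
        raise ValueError(f"unknown scope: {scope!r}")
    return grants
```

Calling `dq_read_grants(client_id, "schema", "sales", schema="orders")` returns the three schema-level statements, which you can paste into a Databricks notebook or SQL editor after reviewing them.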

Next steps

- Enable data quality on connection - Configure your Databricks connection for data quality monitoring

Need help

If you have questions or need assistance with setting up Databricks for data quality, reach out to Atlan Support by submitting a support request.

See also

- Configure alerts for data quality rules - Set up real-time notifications for rule failures