Build custom app

This lesson guides you through building a custom Atlan application from scratch, applying all the patterns and tools you learned in the previous chapters. Think of this as moving from touring model homes to building your own custom home. You take everything you've learned and create something entirely your own.

What you learn here: How to build a local extractor that processes JSON files and converts the data into Atlan’s standardized format. By the end, you have a working extractor application.

Before you begin

Before you start, make sure you have:

- Completed lesson 1: Set up your development environment

- Completed lesson 2: Run your first sample app

Core concepts you apply

Before you start building, understand what your application demonstrates. Think of these concepts as the key systems in the house you're about to construct:

Process Files

Your application reads JSON files containing Table metadata and validates the content structure before processing.

Transform Data

Convert raw metadata into Atlan's standardized format with owner lists, certificates, and hierarchical relationships.

Manage Resources

Implement proper separation of concerns with handlers managing SDK interactions and clients handling file operations.

Web Interface

Use the template's web interface to test your extractor and monitor processing status.

Now that you understand what you're building, time to create your project structure and see these concepts work together in a real application.

Create your project workspace

Time to build your own application! You are going to create a local file extractor using the template from the sample apps repository you worked with in the Run your first sample app lesson. This gives you a proven foundation to build upon.

- Create your project directory: Create a dedicated directory for your extractor application. It keeps your extractor code separate from other projects and becomes your workspace:

mkdir atlan-local-file-extractor-app
cd atlan-local-file-extractor-app

- Copy the template structure: The generic template contains the required files and structure for an Atlan application. It sets up the correct organization, configurations, and placeholder files for your extractor. The trailing /. copies the template's contents, including hidden files, rather than creating a nested generic directory (which GNU cp does when given generic/):

cp -r ../atlan-sample-apps/templates/generic/. .

- Create your Python environment: A virtual environment keeps your project dependencies separate. This prevents package version conflicts between different Python projects and maintains consistent behavior:

uv venv
source .venv/bin/activate # On Windows: .venv\Scripts\activate

What you just created

You now have a complete application workspace on your computer. This is the same template structure you used in Run your first sample app, but now it's yours to customize for file extraction.

Explore your project structure

📁 atlan-local-file-extractor-app/

├── 📄 main.py # Application entry point

├── 📄 pyproject.toml # Python project configuration

├── 📁 app/

│ ├── 📄 __init__.py # Package initialization

│ ├── 📄 activities.py # File processing activities

│ ├── 📄 workflow.py # Extraction workflow orchestration

│ ├── 📄 client.py # File I/O operations

│ ├── 📄 handler.py # SDK interface

│ ├── 📁 templates/ # project templates

│ ├── 📄 workflow.json # Workflow configuration template

└── 📁 tests/ # Test files

Each file has a specific purpose in making your extraction application work properly.
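If you want to confirm that the template copied correctly, a quick check like the sketch below can help. It only looks for the files named in the tree above; the missing_files helper and the EXPECTED_FILES list are illustrative names, not part of the SDK or the template.

```python
from pathlib import Path
from typing import List

# Files taken from the project tree shown above.
EXPECTED_FILES = [
    "main.py",
    "pyproject.toml",
    "app/__init__.py",
    "app/activities.py",
    "app/workflow.py",
    "app/client.py",
    "app/handler.py",
]


def missing_files(project_root: str = ".") -> List[str]:
    """Return the expected files that are not present under project_root."""
    root = Path(project_root)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print(f"Missing files: {missing}")
    else:
        print("All expected files found.")
```

Run it from the project directory. An empty result suggests the copy step worked; a long list of missing files usually means the template landed in a nested directory.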

With your workspace ready, the next step is customizing the application components to handle file extraction. Just like a contractor following blueprints to build specific rooms, you customize each component for your file processing needs.

✨ Your workspace is configured! You have a virtual environment and a project structure ready for customization. You install the SDK and other dependencies when you test the application.

Build your workflow orchestration

Time to customize your workflow for file extraction! Your workflow defines the sequence of operations that transform raw JSON files into standardized metadata. You are going to modify the template's workflow to handle file processing.

💻 Open app/workflow.py. The template provides the workflow structure with retry policies and the get_workflow_args activity. Add the extract_and_transform_metadata activity call (lines 32–37) and update get_activities (line 47) to register it. Here's the complete file:

from datetime import timedelta
from typing import Any, Callable, Dict, Sequence

from app.activities import ActivitiesClass
from application_sdk.activities import ActivitiesInterface
from application_sdk.workflows import WorkflowInterface
from temporalio import workflow
from temporalio.common import RetryPolicy

@workflow.defn
class WorkflowClass(WorkflowInterface):
    @workflow.run
    async def run(self, workflow_config: Dict[str, Any]) -> None:
        """Orchestrate the workflow summary flow."""
        activities_instance = ActivitiesClass()

        # Configure retry policy for resilient activity execution
        retry_policy = RetryPolicy(
            maximum_attempts=6,  # 1 initial attempt + 5 retries
            backoff_coefficient=2,
        )

        # Merge any provided args (from frontend POST body or server config)
        workflow_args: Dict[str, Any] = await workflow.execute_activity_method(
            activities_instance.get_workflow_args,
            workflow_config,
            retry_policy=retry_policy,
            start_to_close_timeout=timedelta(seconds=10),
        )

        # Extract and Transform the metadata
        extraction_result: Dict[str, Any] = await workflow.execute_activity_method(
            activities_instance.extract_and_transform_metadata,
            workflow_args,
            retry_policy=retry_policy,
            start_to_close_timeout=timedelta(seconds=30),
        )

    @staticmethod
    def get_activities(activities: ActivitiesInterface) -> Sequence[Callable[..., Any]]:
        """
        Declare which activity methods are part of this workflow for the worker.
        """
        if not isinstance(activities, ActivitiesClass):
            raise TypeError("Activities must be an instance of ActivitiesClass")

        return [activities.get_workflow_args, activities.extract_and_transform_metadata]

Seeing a type error on extract_and_transform_metadata? Your editor shows an error because this activity doesn't exist in activities.py yet. This is expected: you define it in the next section, and the error disappears once you implement the activity.

What you just built

The workflow itself doesn't process files. Instead, it runs every step in the right order, with Temporal managing error handling and retries. What was once just a template is now the conductor that orchestrates your extractor.

- Temporal integration: Uses the @workflow.defn decorator and WorkflowInterface inheritance to connect with Temporal.

- Retry policy: Configures automatic retries with exponential backoff. If an activity fails due to transient errors (network issues, timeouts), Temporal automatically retries it up to 5 times (6 attempts in total) with increasing delays between attempts.

- Run method: Defines a run method that accepts configuration and drives the workflow.

- Activity orchestration: Instead of a simple "hello world" activity, you're chaining two real steps:

  - get_workflow_args: gathers configuration.

  - extract_and_transform_metadata: processes files and transforms data.

- Timeouts: Gives file processing a 30-second timeout (versus 10 seconds for get_workflow_args), since it takes longer than trivial operations.

- Activity registration: Updates get_activities to register your custom extraction activities instead of placeholders.
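To make the retry behavior concrete, the sketch below computes the wait before each retry for the policy configured above. It assumes Temporal's default initial interval of one second, because the workflow doesn't set initial_interval. The retry_delays helper is hypothetical and exists only for illustration; Temporal performs this calculation itself.

```python
from datetime import timedelta
from typing import List


def retry_delays(
    maximum_attempts: int,
    backoff_coefficient: float,
    initial_interval: timedelta = timedelta(seconds=1),  # assumed default
) -> List[timedelta]:
    """Return the wait before each retry (there is no wait before attempt 1)."""
    retries = maximum_attempts - 1
    return [initial_interval * (backoff_coefficient ** n) for n in range(retries)]


# Same values as the RetryPolicy in the workflow above.
delays = retry_delays(maximum_attempts=6, backoff_coefficient=2)
print([d.total_seconds() for d in delays])  # [1.0, 2.0, 4.0, 8.0, 16.0]
```

Under these assumptions, an activity that keeps failing waits 31 seconds in total across its five retries. Each attempt also has its own start_to_close_timeout.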

✨ Orchestration layer complete! Your workflow can now sequence activities with proper timeout management.

Implement your extraction activities

Your workflow can now orchestrate activities, but what activities does it run? Time to create the extraction logic! Activities contain the business logic for reading files and transforming data. Your workflow calls these activities in sequence to complete the extraction process.

💻 Open app/activities.py and replace all template code with the snippet below. Pay attention to lines 17–74; that’s where your custom logic sits.

import json
import os
from typing import Any, Dict

from application_sdk.activities import ActivitiesInterface
from application_sdk.observability.logger_adaptor import get_logger
from temporalio import activity

from .handler import HandlerClass

logger = get_logger(__name__)
activity.logger = logger

class ActivitiesClass(ActivitiesInterface):
    """Activities for the Extractor app using the handler/client pattern."""

    def __init__(self, handler: HandlerClass | None = None):
        self.handler = handler or HandlerClass()

    @activity.defn
    async def extract_and_transform_metadata(
        self, config: Dict[str, Any]
    ) -> Dict[str, Any]:
        """Extract and transform Table metadata from JSON file."""
        input_file = output_file = ""
        try:
            if not self.handler or not self.handler.client:
                raise ValueError("Handler or extractor client not initialized")

            # Guard against a missing or null "payload" key
            payload = config.get("payload") or {}
            output_file = payload.get("output_file", "transformed_tables.json")
            input_file = payload.get(
                "input_file", "extractor-app-input-table.json"
            )

            if not os.path.exists(input_file):
                raise FileNotFoundError(f"File not found: {input_file}")

            raw_data = json.load(self.handler.client.create_read_handler(input_file))

            transformed_data = []
            for item in raw_data:
                if item.get("Type") == "Table":
                    # Process owner users - split by newline if present
                    owner_users_str = item.get("Owner_Users", "")
                    owner_users = owner_users_str.split("\n") if owner_users_str else []

                    # Process owner groups - split by newline if present
                    owner_groups_str = item.get("Owner_Groups", "")
                    owner_groups = owner_groups_str.split("\n") if owner_groups_str else []

                    transformed_data.append(
                        {
                            "typeName": "Table",
                            "name": item.get("Name", ""),
                            "displayName": item.get("Display_Name", ""),
                            "description": item.get("Description", ""),
                            "userDescription": item.get("User_Description", ""),
                            "ownerUsers": owner_users,
                            "ownerGroups": owner_groups,
                            "certificateStatus": item.get("Certificate_Status", ""),
                            "schemaName": item.get("Schema_Name", ""),
                            "databaseName": item.get("Database_Name", ""),
                        }
                    )

            # Write one JSON object per line (NDJSON)
            with open(output_file, "w", encoding="utf-8") as file:
                for item in transformed_data:
                    json.dump(item, file, ensure_ascii=False)
                    file.write("\n")

            return {"status": "success", "records_processed": len(transformed_data)}
        except Exception as e:
            logger.error(
                f"Failed to extract and transform table metadata: {e}", exc_info=True
            )
            raise
        finally:
            # Always release the file handle, even when an error occurred
            if self.handler and self.handler.client:
                self.handler.client.close_file_handler()

What you just built

This activity does the heavy lifting of your extractor: it reads raw metadata and transforms it into a format Atlan understands. Here's what it includes:

- Temporal integration: Uses the @activity.defn decorator to define activities that can be called from the workflow.

- Separation of concerns: Follows a handler/client pattern for cleaner structure, with built-in logging and error handling.

- Configuration handling: Reuses the get_workflow_args activity to merge parameters. The snippet doesn't redefine it, so it is inherited from ActivitiesInterface.

- File validation: Checks that the input file exists before processing, so failures are clear and fast.

- JSON processing: Uses the client’s file handler (coming next) to safely read JSON data, replacing placeholder logic.

- Owner list transformation: Splits newline-separated owners ("jsmith\njdoe") into arrays (["jsmith", "jdoe"]).

- Data transformation loop: Filters for Table assets and maps fields from your source format to Atlan’s standard format.

- Output generation: Writes transformed data as newline-delimited JSON (NDJSON), a format suited to streaming large datasets.

- Resource cleanup: Closes files properly, even when errors occur.
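The field mapping and owner splitting described above can also be tried on their own, without Temporal or the SDK. The sketch below is a standalone variation, not the tutorial's code: split_owners and transform_table are hypothetical helper names, and unlike the activity, split_owners also strips whitespace and drops blank entries, which is an assumption about what you might want for messier input.

```python
from typing import Any, Dict, List


def split_owners(value: Any) -> List[str]:
    """Split a newline-separated owner string, dropping blank entries."""
    return [name.strip() for name in (value or "").split("\n") if name.strip()]


def transform_table(item: Dict[str, Any]) -> Dict[str, Any]:
    """Map one source record to the output shape used in this lesson."""
    return {
        "typeName": "Table",
        "name": item.get("Name", ""),
        "displayName": item.get("Display_Name", ""),
        "description": item.get("Description", ""),
        "userDescription": item.get("User_Description", ""),
        "ownerUsers": split_owners(item.get("Owner_Users")),
        "ownerGroups": split_owners(item.get("Owner_Groups")),
        "certificateStatus": item.get("Certificate_Status", ""),
        "schemaName": item.get("Schema_Name", ""),
        "databaseName": item.get("Database_Name", ""),
    }


sample = {
    "Type": "Table",
    "Name": "CUSTOMER",
    "Owner_Users": "jsmith\njdoe",
    "Owner_Groups": "",
}
result = transform_table(sample)
print(result["ownerUsers"])   # ['jsmith', 'jdoe']
print(result["ownerGroups"])  # []
```

Keeping the mapping in small pure functions like these makes it easy to unit test without starting a workflow.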

✨ Data transformation ready! Your extractor can parse JSON and convert owner lists from newline-separated strings to arrays.

Create your client for file operations

Your activity knows WHAT to do, but it needs help with HOW to safely read files. Time to build the client that handles file operations! The client manages file I/O operations with proper resource handling. Your activity will use this client to read JSON files safely and efficiently.

💻 Open app/client.py and replace all template code with the snippet below. Pay attention to lines 14–34; that’s where your custom logic sits.

import os
from typing import Optional, TextIO

from application_sdk.observability.logger_adaptor import get_logger

logger = get_logger(__name__)

class ClientClass:
    """Client for handling JSON file operations and resource management."""

    def __init__(self):
        # Template: Initialize file handler attribute
        self.file_handler: Optional[TextIO] = None

    # ========== ✨ CUSTOM METHODS ADDED ==========
    def create_read_handler(self, file_path: str) -> TextIO:
        """Create and return a file handler for reading JSON files."""
        if not os.path.exists(file_path):
            raise FileNotFoundError(f"File not found: {file_path}")

        if self.file_handler:
            self.close_file_handler()

        self.file_handler = open(file_path, 'r', encoding='utf-8')
        logger.info(f"Created file handler for: {file_path}")
        return self.file_handler

    def close_file_handler(self) -> None:
        """Close the current file handler and clean up resources."""
        if self.file_handler:
            self.file_handler.close()
            self.file_handler = None
            logger.info("File handler closed successfully")

    # ========== ✨ END CUSTOM METHODS ==========

What you just built

The client abstracts file operations away from your activities. If you later switch from local files to cloud storage or a database, you only update this client. Your activities stay unchanged. The separation keeps your extractor clean, maintainable, and testable:

- Class structure: Defines a ClientClass with logging and clear initialization, ready for extension.

- File opening with safety: The create_read_handler method adds critical protections:

  - Validates file existence before opening.

  - Cleans up previously opened files to avoid leaks.

  - Uses UTF-8 encoding to handle international characters.

- Resource cleanup: The close_file_handler method closes files and resets the handler, preventing exhaustion of file handles.
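To illustrate why this separation helps, here is a minimal sketch of an alternative client that exposes the same two methods but serves content from memory instead of disk. InMemoryClient is a hypothetical class, not part of the template or the SDK; it's the kind of stand-in you might use in a unit test.

```python
import io
import json
from typing import Dict, Optional, TextIO


class InMemoryClient:
    """Hypothetical stand-in for ClientClass that serves files from a dict."""

    def __init__(self, files: Dict[str, str]):
        self.files = files
        self.file_handler: Optional[TextIO] = None

    def create_read_handler(self, file_path: str) -> TextIO:
        """Return a readable handle for the named in-memory file."""
        if file_path not in self.files:
            raise FileNotFoundError(f"File not found: {file_path}")
        if self.file_handler:
            self.close_file_handler()
        self.file_handler = io.StringIO(self.files[file_path])
        return self.file_handler

    def close_file_handler(self) -> None:
        """Close the current handle and reset it."""
        if self.file_handler:
            self.file_handler.close()
            self.file_handler = None


client = InMemoryClient({"tables.json": '[{"Type": "Table", "Name": "CUSTOMER"}]'})
try:
    records = json.load(client.create_read_handler("tables.json"))
finally:
    client.close_file_handler()
print(records[0]["Name"])  # CUSTOMER
```

One caveat: the activity in this lesson also calls os.path.exists on the input path itself, so swapping in a non-filesystem client would require moving that check into the client as well.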

✨ Resource management implemented! Files are now handled safely with automatic cleanup, even during errors.

Create your handler interface

Now you have activities that know what to do and a client that knows how to do it safely. But how does the outside world communicate with your extractor? The handler is the bridge! It provides the SDK interface between the web interface and your business logic, coordinating between the frontend, SDK, and your client.

💻 Review app/handler.py to see how the handler bridges your components. You don't need to change this file.

What the handler provides

The handler acts as your application's SDK interface, and the template version includes everything your extractor needs:

- Initializes with your ClientClass to make it available to activities

- Manages credentials passed from the SDK

- Performs connectivity and preflight checks before processing

- Inherits from HandlerInterface for SDK compatibility

The template handler works as-is because your file extraction happens in activities, not in the handler. The handler just needs to bridge the SDK with your client, which the template already does perfectly.

✨ SDK bridge connected! Your handler links the web interface to your extraction logic. Next, build the web interface to complete your extractor.

Build your web interface

Your extractor has all the backend logic, but how do users interact with it? Time for the frontend! The web interface allows users to specify file paths and monitor processing. It demonstrates how configuration flows from the UI through your handler, to the workflow, and finally to your activities.

🧩 Set up your frontend playground

# Create frontend directory in the project directory

mkdir -p frontend/static

# Install playground tool

npx @atlanhq/app-playground install-to frontend/static

💻 Create frontend/config.json with the configuration manifest:

{
  "id": "Extractor",
  "name": "Extractor",
  "logo": "https://assets.atlan.com/assets/atlan-bot.svg",
  "config": {
    "properties": {
      "input_file": {
        "type": "string",
        "required": true,
        "ui": {
          "label": "Input JSON file path",
          "placeholder": "for example, extractor-app-input-table.json",
          "grid": 8
        }
      },
      "output_file": {
        "type": "string",
        "required": true,
        "ui": {
          "label": "Output file path",
          "placeholder": "for example, extractor-app-input-table_transformed.json",
          "grid": 8
        }
      }
    },
    "steps": [
      {
        "id": "payload",
        "properties": [
          "input_file",
          "output_file"
        ]
      }
    ]
  }
}

What you just built

The configuration manifest defines how your extractor's UI gets generated. The template's frontend components automatically transform this JSON into a working interface:

- Properties definition: Each property (input_file, output_file) becomes a form field with type validation, required flags, and UI hints.

- UI configuration: The ui object controls the visual presentation: labels for clarity, placeholders for guidance, and grid sizing for layout.

- Steps array: Groups properties into logical sections. Here you have one step called "payload" that contains both file paths.

- JSON-Schema validation: The frontend uses JSON-Schema to validate user input before submission, preventing invalid configurations from reaching your workflow.

- Automatic form generation: The template's form engine reads this manifest and generates the HTML form dynamically, so no manual HTML editing is needed.

- Configuration flow: When users submit, the form data gets normalized into the exact structure your workflow expects in workflow_config.

The template's existing frontend components in frontend/static/ automatically use this configuration to generate the user interface. When users submit the form, it creates the normalized configuration payload that gets passed to your workflow.
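As a rough illustration of that flow, the sketch below shows the configuration shape the activity appears to expect and how the two file paths can be read from it. The shape is inferred from this lesson: the step id is "payload", and the activity reads input_file and output_file from a "payload" key. The real workflow_config may carry additional keys, and read_file_paths is a hypothetical helper.

```python
from typing import Any, Dict, Tuple


def read_file_paths(config: Dict[str, Any]) -> Tuple[str, str]:
    """Read the input and output paths, falling back to the activity's defaults."""
    payload = config.get("payload") or {}
    input_file = payload.get("input_file", "extractor-app-input-table.json")
    output_file = payload.get("output_file", "transformed_tables.json")
    return input_file, output_file


# Assumed shape of the submitted form data once it reaches the activity.
example_config = {
    "payload": {
        "input_file": "extractor-app-input-table.json",
        "output_file": "extractor-app-input-table_transformed.json",
    }
}

print(read_file_paths(example_config))
print(read_file_paths({}))  # falls back to the defaults
```

Because each property name in the manifest becomes a key under the step id, renaming a property in config.json means updating the matching lookup in the activity.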

🎯 All Components Ready! Your extractor now has a complete user interface. You've built every component your application needs. Fire it up and watch it run!

Test your extraction application

The moment of truth! You've built a complete extraction pipeline: workflow orchestrates, activities transform, client handles files safely, and handler connects everything to the web interface. Time to see your file extractor in action with real data.

- Create test data: Create a file called extractor-app-input-table.json in your project directory:

[
  {
    "Type": "Table",
    "Name": "CUSTOMER",
    "Display_Name": "Customer",
    "Description": "Staging table for invoice data.",
    "User_Description": "",
    "Owner_Users": "jsmith\njdoe",
    "Owner_Groups": "",
    "Certificate_Status": "VERIFIED",
    "Schema_Name": "PEOPLE",
    "Database_Name": "TEST_DB"
  },
  {
    "Type": "Table",
    "Name": "CUSTOMER_2",
    "Display_Name": "Customer 2",
    "Description": "Production table for customer data.",
    "User_Description": "",
    "Owner_Users": "jsmith\njdoe",
    "Owner_Groups": "",
    "Certificate_Status": "VERIFIED",
    "Schema_Name": "PEOPLE",
    "Database_Name": "TEST_DB"
  }
]

- Install all required packages: This command downloads and installs all Python libraries and tools your application needs to run locally:

uv sync --all-extras --all-groups

- Download required components: Get the Temporal server, database, and other services your application needs to run workflows:

uv run poe download-components

- Start the supporting services: In a new terminal, start Temporal, the database, and the other services your workflows depend on:

# In a new terminal

uv run poe start-deps

- Start your extractor application: With the supporting services running, start the application:

uv run main.py -

Access the application: Open your web browser and go to

http://localhost:8000to access the interface.

-

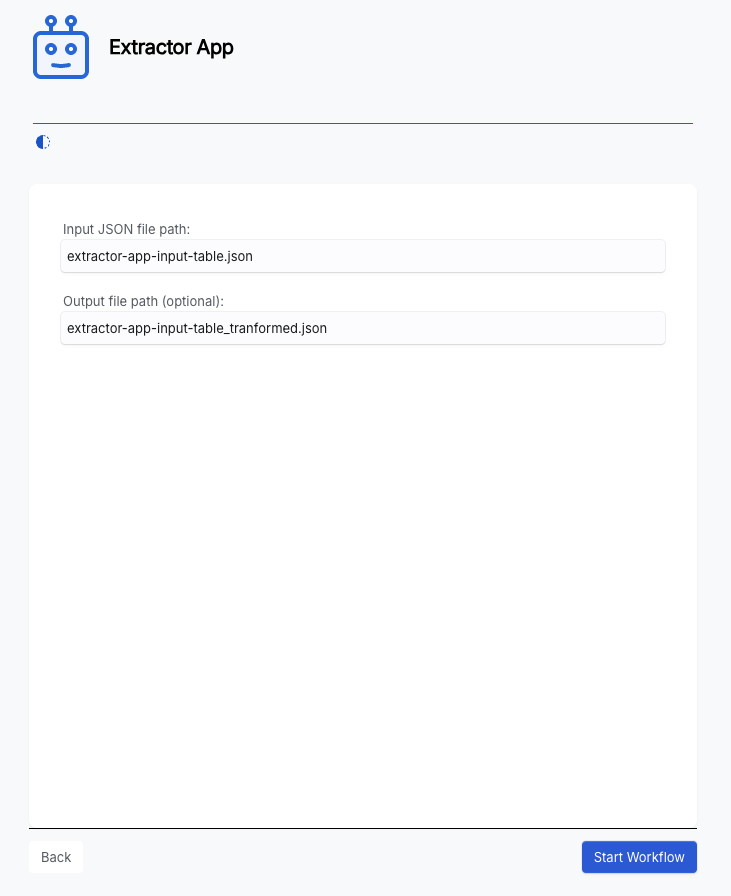

Provide input: When the interface loads, you need to provide the input and output file. Submit the form to start the extraction process.

  - Input file: Enter extractor-app-input-table.json (the file you just created)

  - Output file: Enter extractor-app-input-table_transformed.json (where you want to save the transformed data)

- Confirm submission: After submission, go to http://localhost:8233 to open the workflow dashboard. Verify that the workflow has completed.

- Check your results: After processing completes, look for the generated extractor-app-input-table_transformed.json file in your project directory. The file contains the transformed Table records:

{"typeName": "Table", "name": "CUSTOMER", "displayName": "Customer", "description": "Staging table for invoice data.", "userDescription": "", "ownerUsers": ["jsmith", "jdoe"], "ownerGroups": [], "certificateStatus": "VERIFIED", "schemaName": "PEOPLE", "databaseName": "TEST_DB"}

{"typeName": "Table", "name": "CUSTOMER_2", "displayName": "Customer 2", "description": "Production table for customer data.", "userDescription": "", "ownerUsers": ["jsmith", "jdoe"], "ownerGroups": [], "certificateStatus": "VERIFIED", "schemaName": "PEOPLE", "databaseName": "TEST_DB"}

Your extractor successfully transforms raw metadata into Atlan's standardized format, including processing owner lists, certificates, and hierarchical relationships.
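If you'd rather verify the output programmatically than by eye, a short script along these lines can help. It's a minimal sketch that assumes the output path you entered in the form; load_ndjson and check_records are hypothetical helpers, and the checks cover only the fields shown above.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def load_ndjson(path: str) -> List[Dict[str, Any]]:
    """Parse a newline-delimited JSON file: one JSON object per non-empty line."""
    records = []
    with open(path, encoding="utf-8") as file:
        for line in file:
            if line.strip():
                records.append(json.loads(line))
    return records


def check_records(records: List[Dict[str, Any]]) -> List[str]:
    """Return a description of each problem found in the records."""
    problems = []
    for number, record in enumerate(records, start=1):
        if record.get("typeName") != "Table":
            problems.append(f"record {number}: typeName is not 'Table'")
        for key in ("ownerUsers", "ownerGroups"):
            if not isinstance(record.get(key), list):
                problems.append(f"record {number}: {key} is not a list")
    return problems


if __name__ == "__main__":
    output_path = "extractor-app-input-table_transformed.json"  # assumed path
    if Path(output_path).exists():
        found = check_records(load_ndjson(output_path))
        print(found or "Output looks good.")
    else:
        print(f"{output_path} not found; run the workflow first.")
```

Reading line by line, rather than calling json.load on the whole file, matters here because NDJSON as a whole is not a single valid JSON document.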

You did it! You have completed the tutorial series and built a solid foundation in Atlan application development.

What's next

You successfully built a complete local file extraction application using the Atlan Application SDK. Your application demonstrates professional patterns including Temporal workflow orchestration, proper separation of concerns with the handler/client architecture, and reliable file processing with comprehensive error handling.

The generic template you worked with is a GitHub template, which means you can create your own copy and use it as the foundation for your own custom applications.

Continue learning

Deepen your understanding of Atlan application development with these resources:

- Application Architecture: Deep dive into the technical architecture and how Temporal, Dapr, and the SDK work together in your applications

- Sample Applications: Explore more examples to learn different patterns and use cases

- Application Structure: Reference guide for organizing your application code

- Development and Deployment: Common questions about typedefs, deployment processes, and technical requirements

- Platform Integration: Questions about design guidelines, events, and third-party integrations